OK, leaving CCNP SWITCH aside for a bit, I finally got around to setting up Linux so that I can run SDM.

I should point out that I am not a great fan of SDM, but I do run the IOS-based firewall on one of my small networks. And while I am happy to change the policy config from the command line, it can be hard to visualise what is going on in 600+ lines of config. So I do fall back to SDM every now and then.

On the above network I have 100% Linux machines, including those sitting in the management subnet. So up until now, if I wanted to run SDM I had to get out a Windows laptop and plug it in, and for a while I have been looking at how I could get SDM up and running on Linux.

There is no Linux version of SDM, but you can install SDM either on the PC or on the router. According to Cisco, the version installed on the router will work as long as the web browser has JavaScript enabled. However, despite trying 2 different versions of Firefox, Google Chrome, and numerous Java versions, this method would always hang at the same point on all three of the PCs I tried it from. I also don’t really like the idea of running SDM from the router: it takes up space and resources and is another thing to go wrong.

So the alternative was to attempt to run SDM from within Linux. You will read on the web that Cisco SDM is a Java-based HTML application, and so in theory you can simply copy the install files across from Windows to Linux, move a few files around, and then open up your web browser and point it to the “launcher.html” file you will find in the install directory. However, my attempt at this again proved unsuccessful. (I am not sure if this was due to an incorrect Java version. I did try a few, but SDM is very fussy about Java, and Linux is not so happy with multiple Java versions. See here for instructions for this method.)

So I decided to go the whole hog and experiment with Wine. Wine, for those of you who don’t know, is a platform that allows you to run native Windows applications within Linux. I like to think of it as a Windows emulator, although some purists will tell you this is not quite correct. But whatever you call it, it will allow you to run many Windows applications on Linux, and while some people may rebel at the idea of that, I am more of the opinion that if it works and gets the job done, then I don’t really have a problem.

So setting it all up.

The first thing to do is add the Wine repository (ppa:ubuntu-wine/ppa) to your distribution. In Ubuntu this can be done either using the settings in the graphical package manager, or by running the following command.

sudo add-apt-repository ppa:ubuntu-wine/ppa

Then update the repository cache (“sudo apt-get update” from the command line).

If you are running the GUI package manager, search for wine and tick the wine1.2 package (at the time of writing this is the current stable version; you should pick the latest stable). Or from the CLI type:

sudo apt-get install wine1.2

Wine will now be installed.
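
If you would rather keep the three commands above together, something like the following sketch could work. The `run` helper and the `DRY_RUN` variable are my own illustrative names, not part of Wine or apt, and by default the function only prints the commands so you can check them before running anything for real.

```shell
#!/bin/sh
# Sketch only: wraps the three install steps from this post in one function.
# DRY_RUN is a hypothetical variable; it defaults to 1 (print, do not run).

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"      # show the command instead of running it
    else
        "$@"           # run the command for real
    fi
}

install_wine() {
    pkg="${1:-wine1.2}"    # package name used in this post; pick the current stable one
    run sudo add-apt-repository ppa:ubuntu-wine/ppa &&
    run sudo apt-get update &&
    run sudo apt-get install "$pkg"
}

# Usage:
#   install_wine              prints the three commands
#   DRY_RUN=0 install_wine    actually runs them
# Afterwards "wine --version" should report the installed version.
```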

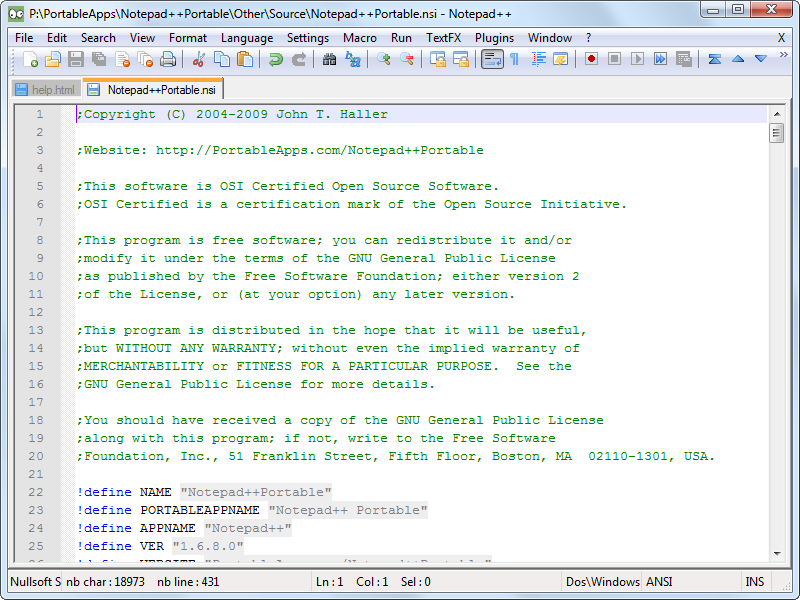

You now need to get hold of Cisco SDM, Firefox 3.5 (it must be 3.5; this will not work with version 3.6 due to Java issues), and a copy of Java 6 update 11 (make sure it is this exact version, as SDM is very, very picky).

Once you have downloaded them all, you can simply open them in the GUI. You may get an error saying that they are not executable files: by default Linux will not allow a file to be executed unless its execute permission has been set. If you get this message, simply right click, go to Properties and tick the execute box under the Permissions tab. You can also run “sudo chmod +x <filename>” to achieve the same.
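
As a rough sketch of the command line route for all three installers at once, a small helper like the one below would do. The function name `make_executable` and the file names in the usage comment are placeholders of mine, and note that for files you own (such as your own downloads) plain `chmod` without `sudo` is normally enough.

```shell
#!/bin/sh
# Sketch only: mark each downloaded installer as executable and report the result.
# make_executable is a hypothetical helper name.

make_executable() {
    for f in "$@"; do
        if [ -f "$f" ]; then
            chmod +x "$f" && echo "executable: $f"
        else
            echo "not found: $f" >&2
        fi
    done
}

# Usage (placeholder file names, use whatever you downloaded):
#   make_executable ~/Downloads/sdm-setup.exe ~/Downloads/firefox-setup.exe
```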

You should now be able to run the setup programs and follow the installs exactly as you would under Windows. Once you have installed all three, check that you can open Firefox. You can find this either in the applications menu under

Wine >> wine applications >> firefox

Or you should have a shortcut on the desktop (you may need to make this shortcut executable as above).

You will also have an SDM shortcut on the desktop. However, this will bring up the Wine IE browser, which does not work, so you can’t use it directly.

Instead, open up the Firefox you have just installed, type “c:” in the address bar and hit Return / click Go. This will bring up a folder list for the Windows file system that Wine created. Open “Program Files” >> cisco systems >> SDM >> common files >> common files. Here you will find a file called Launcher.html, which you want to open (I would also suggest adding it as a shortcut).
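
If you have trouble finding the file through the browser, you can also look for it from the Linux side. Wine keeps its “c:” drive in a drive_c folder inside the Wine prefix, which is ~/.wine unless you have set WINEPREFIX. The function name `find_launcher` below is just my own label for this sketch.

```shell
#!/bin/sh
# Sketch only: search the Wine "c:" drive for the SDM launcher page.
# Wine stores that drive as drive_c inside the Wine prefix
# (~/.wine unless WINEPREFIX is set).

find_launcher() {
    prefix="${WINEPREFIX:-$HOME/.wine}"
    # -iname ignores case, so Launcher.html and launcher.html both match
    find "$prefix/drive_c" -iname 'launcher.html' 2>/dev/null
}

# Usage:
#   find_launcher
```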

And there you are: Cisco SDM will now function as it does in Windows, pop-up boxes and all. You can even create a desktop icon that will pass the file above to Firefox if you wish.
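
One way to do the desktop icon is a tiny wrapper script that starts the Wine-installed Firefox with the launcher page. This is only a sketch: `write_sdm_wrapper` is a name I made up, and both paths you pass in are assumptions that depend on where Firefox and Launcher.html ended up under your Wine prefix.

```shell
#!/bin/sh
# Sketch only: generate a wrapper script that opens the SDM launcher page
# in the Firefox installed under Wine. write_sdm_wrapper is a hypothetical
# helper; the paths passed to it are assumptions you need to adjust.

write_sdm_wrapper() {
    out="$1"           # where to write the wrapper, e.g. ~/Desktop/sdm.sh
    firefox_exe="$2"   # Unix path to firefox.exe under the Wine prefix
    launcher_url="$3"  # file:///C:/... URL of Launcher.html
    {
        echo '#!/bin/sh'
        # Paths with spaces are fine; paths containing quotes or $ are not handled
        printf 'exec wine "%s" "%s"\n' "$firefox_exe" "$launcher_url"
    } > "$out" && chmod +x "$out"
}

# Usage (both paths are placeholders):
#   write_sdm_wrapper ~/Desktop/sdm.sh \
#       "$HOME/.wine/drive_c/Program Files/Mozilla Firefox/firefox.exe" \
#       "file:///C:/path/to/Launcher.html"
```

I went for a plain script rather than a proper desktop entry because it is easier to test from a terminal first; once it works you can point a desktop icon at it.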

Hope that’s of some help to people. If I get it running completely natively without the need for Wine, I will be sure to let you know.

DevilWAH